(or what did we do with all the time we saved?)

3 Days, 3 Guys, and Some Graph Paper – The Early Days

I was introduced to commercial electromagnetic interference (EMI) testing in 1984. While working at Intergraph Corp. in Huntsville, Alabama, I got to work in the coolest looking building in town. It was an oversized pool cover that we called ‘The Bubble’. The bubble building was on a hillside that allowed the Open Area Test Site (OATS) ground plane to be a bit above the surrounding terrain, free of conductive building materials and large enough for a 10 meter ellipse, though we primarily used it for 3 meter testing.

In those days, Intergraph was producing large rack mounted computer systems that focused on high resolution graphics and the storage required to support the high detail needed for CAD/CAM and geographical mapping. For instance, each rack would contain three phase power distribution, some hard disk drives and a subsystem. Typically we needed racks for the CPU, the graphics processor, storage, and maybe a rack just for managing it all. The main interface was a line printer or alphanumeric monitor that would require at least one systems engineer to type in the boot sequence and all of the commands to get the system to eventually print a capital ‘H’ pattern to all display and I/O devices. Sometimes this took days to set up and run. All of this would support a separate graphics workstation that could have dual high resolution displays and a large digitizer table. All very cool stuff, but even though it was capable of mapping the world in 3D and color, all we got to see were scrolling Hs.

Once running, it was difficult to shut down and re-start just because it was the end of the work day. So, like all EMC guys, our real work did not start till after 5:00 pm on Friday and of course the system had to ship out on Monday! After over 26 years, I can see that not much has changed about the demands on the electromagnetic compatibility (EMC) community.

The test methodology was an exercise in consumption of time and money and the outpouring of expletives and sweat. We would have one person drive the spectrum analyzer and another record bogeys. Since this was an OATS, we were subject to the ambients found in that area. It was easy to discern most ambients by knowing all of the local licensed transmitters such as radio and TV broadcasts. We could also ‘tune in’ using the demod on the Quasi Peak adaptor to listen to the emission to determine if it was our EUT or not. So, before the EUT was powered on, we would do a manual scan through the spectrum and record any ambients that we could not identify. This could take over three hours.

After deciding on who was going to work the weekend, we would go through the same scanning technique with the Equipment under Test (EUT) fully operational, with one person calling out frequency and amplitude and the other recording them in a table. We would try to cross reference the ambient list, but sometimes ambients would sneak through. An ambient does not matter unless it is close to or over your limit line. To stop a test and shut down the EUT was the last thing we wanted to do. Sometimes we could use a near field probe to validate that the emission actually came from the EUT. Did I mention that we had to test this rack mounted system in multiple azimuths, two polarities and two antenna ranges? To ‘go back’ to a previous position was a horrifying contemplation!

The ‘Real Time Limit Line’ was a penciled in line on some graph paper that would alter the FCC Class A limit line to account for cable loss and antenna factor. The line would look like the contour of your factors with the limit line break points at 88 and 216 MHz. A ruler would help for those pesky middle frequencies.
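The arithmetic behind that penciled line can be sketched in a few lines of Python. This is only an illustration, assuming the usual relation that corrected field strength equals the analyzer reading plus antenna factor plus cable loss, so the line drawn against raw readings is the limit minus both factors. The break points at 88 and 216 MHz come from the description above; the limit values in `LIMIT_SEGMENTS` are placeholder numbers, not quoted from any rule, and the function names are hypothetical.

```python
from bisect import bisect_right

# (segment start in MHz, limit in dBuV/m). Break points follow the text;
# the limit values are PLACEHOLDERS and must be replaced with the figures
# from the applicable rule at your measurement distance.
LIMIT_SEGMENTS = [(30.0, 40.0), (88.0, 43.5), (216.0, 46.0)]


def limit_db_uv_per_m(freq_mhz):
    """Return the stepped limit that applies at freq_mhz."""
    starts = [start for start, _ in LIMIT_SEGMENTS]
    index = bisect_right(starts, freq_mhz) - 1
    if index < 0:
        raise ValueError("frequency is below the first limit segment")
    return LIMIT_SEGMENTS[index][1]


def raw_limit_db_uv(freq_mhz, antenna_factor_db, cable_loss_db):
    """Limit as it appears on the analyzer display (uncorrected dBuV).

    Subtracting the factors from the limit is equivalent to adding them
    to every reading, which is what the graph paper line encoded.
    """
    return limit_db_uv_per_m(freq_mhz) - antenna_factor_db - cable_loss_db
```

Plotting `raw_limit_db_uv` across the band with the antenna and cable factors for each frequency gives a curve that follows the contour of the factors and steps at the break points, which is the shape described above.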

‘Plotting’ along: The two taped sections of graph paper represent biconical and log periodic ranges. They are also serialized since each antenna has to have its own graph. You can see the breaks in the limit lines here as well. Contrast the full range in the automated plot. (Image courtesy of ETS-Lindgren)

The third person (boss) was required at the end of the testing when all data was captured on multiple sheets of paper. We would sit together with one person reading out loud each emission and amplitude while another person cross referenced the ambient list and the other person looked at the graph paper to see what the delta was. After hours of this, we would have a list of emissions for which we had to determine the final result, using a calculator to get the absolute value and the delta to the limit. As Kimball Williams mentions in his sidebar article, the questioning of data accuracy and integrity was a major factor early Monday morning! If a failure or marginal emission was identified, we would have to crank up the beast, look for the worst case positioning of cables and peripherals, then take pictures of it.

The final report would include the six highest emissions with their associated position, polarity, and antenna height along with the photos. We would use a Polaroid camera with a hood on it that would fit over the spectrum analyzer display to provide graphical data of the top six emissions, one at a time. Of course this was raw data, so we would place the display line where the corrected limit line should be. If a failure was found, the whole process was then called a pre-compliance engineering run.

Conducted emissions were similar since we did not have a chamber to block ambient signals from getting onto our power lines.

First Generation Automation

After years of doing it ‘that way’ we finally bought an HP 9300 computer to run a version of Hewlett Packard EMI software so that we could at least automate the spectrum capture and quasi peak measurements. Though this software was technically accurate and functional, it was not usable for us. We found that it did not have a good method for discriminating against ambients and it was too rigid with its process. At this time (around 1989, I think) we realized we could still use the computer connected to the analyzer to automate some processes. Any time savings was an improvement! Eventually the test time was reduced from as long as one week to about four hours. EUTs also got smaller, with less complex setups.

The computer ran HP Basic, also known as Rocky Mountain Basic (RMB). I did not have any programming background but found it to be pretty easy to work with. As I became the resident code monkey on this system, I eventually had to make it work with FCC, VDE, and then CISPR 22 methodology and limits. By the time I left the company in 1997, it was mostly used for CISPR 22 testing.

The basic method that I used was to automatically tune the system from 30 MHz to 1 GHz in 10 MHz spans, then let the power of the HP 8566B analyzer run marker peak, then ‘next’ marker peak, to find all peaks in each 10 MHz span. This could be done in the ambient mode and EUT mode. The computer would compare the ambient and EUT lists to provide a list of suspects. The suspects were determined by comparing the frequency and amplitude within something like 2x the resolution BW to see if they were the same. The suspect list erred on the ambient side so it would always provide more suspects for the operator to manually discriminate. The SW would also allow the operator to manually optimize any emission not on the list. The SW would then tune each suspect on the analyzer so that the operator could manually maximize the emission or discard it as an ambient. Once it was maximized, the SW would run the correction factors, determine the delta to the limit, and capture the positioner information. At test completion, the SW would sort the emissions by margin to the limit and report the six highest with positioner information for the final report.
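The comparison and sorting steps can be sketched in Python. This is not the original RMB code, only a minimal illustration of the idea: an EUT peak is dropped as an ambient only when an ambient peak matches it in both frequency and amplitude, so anything doubtful stays on the suspect list. The names `Peak`, `find_suspects`, and `worst_margins` are hypothetical, the 3 dB amplitude tolerance is an assumption, and the default resolution bandwidth of 0.12 MHz is the 120 kHz quasi-peak bandwidth used in this frequency range.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Peak:
    freq_mhz: float
    level_db: float


def find_suspects(eut_peaks, ambient_peaks, rbw_mhz=0.12, amp_tol_db=3.0):
    """Return the EUT-scan peaks that no ambient-scan peak explains."""
    freq_tol_mhz = 2 * rbw_mhz  # "something like 2x the resolution BW"
    suspects = []
    for peak in eut_peaks:
        is_ambient = any(
            abs(peak.freq_mhz - amb.freq_mhz) <= freq_tol_mhz
            and abs(peak.level_db - amb.level_db) <= amp_tol_db
            for amb in ambient_peaks
        )
        if not is_ambient:
            suspects.append(peak)  # left for the operator to judge
    return suspects


def worst_margins(corrected_peaks, limit_fn, count=6):
    """Sort corrected peaks by margin to the limit, smallest margin first.

    limit_fn maps a frequency in MHz to the limit in the same units as
    the corrected levels. A negative margin means the peak is over.
    """
    rows = [(p, limit_fn(p.freq_mhz) - p.level_db) for p in corrected_peaks]
    rows.sort(key=lambda row: row[1])
    return rows[:count]
```

A peak that sits on an ambient frequency but is 10 dB stronger than the ambient reading stays on the list, which matches the intent of giving the operator more suspects rather than fewer.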

The report was an MS Word template that had fields for the EUT variables and data. It was a very simple method, but is still effective to this day.

This type of computer/instrument setup spawned many independently developed acquisition programs across the industry, known as ‘home brew’. Home brew is still used today and holds the single largest share of the test software market. Much of today’s home brew uses LabVIEW.

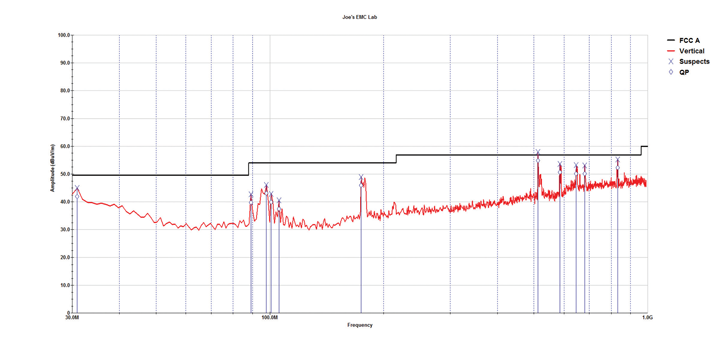

All the answers: This graph shows the same range on one sheet of paper compared to the graph paper which needed four sheets. This range also takes advantage of a broadband antenna that does not have a break at 200 MHz. This type of graph can be formatted to show EUT, Client, Date/Time and Comments so that you can see all you need to know about the test and EUT. (Image courtesy of ETS-Lindgren)


Test Software Accuracy

The test software accuracy was dependent on raw data plus interpolated correction factors. The interpolation was validated to any ‘auditor’ by having them first do a manual calculation on any frequency, then let the automation do the same thing to show that it is correct. No standard says that you have to do a mathematical interpolation if you only have a graph of factors to eyeball. So interpolation by eye was and is usable, though the human factor is involved. For some reason the EMC engineer seems to take the harsher side of the line than the customer. Any time there was external test data on an EUT, we would do an A to B comparison of the results. If data is in dispute after the math has been validated, the next step is to look at normalized site attenuation data and antenna calibrations. Dan Hoolihan’s sidebar article provides an auditor’s perspective on automated EMC test software when evaluating a lab for accreditation.
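The manual check an auditor would do against the automation amounts to something like the following Python sketch. It assumes straight-line interpolation between adjacent calibration points on a linear frequency axis, which is only one reasonable choice (some labs interpolate on a log frequency axis), and the function names and table layout are hypothetical.

```python
from bisect import bisect_left


def interpolate_factor(freq_mhz, table):
    """Linearly interpolate a correction factor in dB.

    table is a list of (freq_mhz, factor_db) pairs sorted by frequency,
    for example the points from an antenna calibration sheet.
    """
    freqs = [f for f, _ in table]
    if not freqs[0] <= freq_mhz <= freqs[-1]:
        raise ValueError("frequency outside the calibrated range")
    upper = bisect_left(freqs, freq_mhz)
    if freqs[upper] == freq_mhz:
        return table[upper][1]  # exact calibration point, no interpolation
    (f0, v0), (f1, v1) = table[upper - 1], table[upper]
    return v0 + (v1 - v0) * (freq_mhz - f0) / (f1 - f0)


def corrected_level(reading_db_uv, freq_mhz, antenna_factors, cable_losses):
    """Corrected field strength: raw reading plus both interpolated factors."""
    return (
        reading_db_uv
        + interpolate_factor(freq_mhz, antenna_factors)
        + interpolate_factor(freq_mhz, cable_losses)
    )
```

With calibration points of 10 dB at 100 MHz and 14 dB at 200 MHz, the factor at 150 MHz works out to 12 dB, which is easy to confirm by hand before trusting the automated result.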

READ SIDEBAR: Software Validation Relative to EMC Lab Assessment by Daniel D. Hoolihan

Second Generation Automation

Commercially developed test software is available for almost any test application. Since EMC standards have evolved to include broader frequency ranges and more bandwidths, automation is a requirement! Industry auditors that protect the integrity of the lab affiliations must review software usage as well as lab processes and methodology. Home brew software is at a disadvantage during an audit, since the developer has to jump through a lot of hoops, including revision control, to show that it is and will remain an accurate method of data acquisition. If the developer leaves the company, someone else has to take over. What was an internal asset that was probably also a hobby now becomes a huge liability.

Commercial software is not scrutinized at the lab level since it is widely accepted and follows industry standards for revision control and development. National Instruments has developed a whole business by providing automation options for almost any industry. Their LabVIEW program can be used as an open platform for controlling instruments of almost any type as long as they can communicate with a computer. Instrument communications have evolved from RS-232 and GPIB to USB and Ethernet. At this time, the newer communications protocols are not a great improvement in speed or accuracy, but they do reduce the cost of cables and eliminate the need for GPIB and serial ports.

LabVIEW is powerful but not easy for the typical lab engineer to master and maintain. HP VEE is another instrument-centric software program that can be used to automate EMC testing. Like LabVIEW, HP VEE can be used to develop commercial or home brew solutions. Other software is written in C++, which is very powerful but requires a software engineer to develop and maintain.

Many new instruments are PCs with an RF section and can provide a lot of internal automation. The drawback to these instruments is that they do not work well with external equipment such as positioners, and when one goes out for calibration it is difficult to replace with another device. They would be adequate for bench testing and pre-compliance work. They are most suited for military testing since the instruments have built in military specified bandwidths/sweep times and allow for inclusion of correction factors.

Instrument vendors have developed software to take full advantage of their products. This is a good option if the lab is going to only use that vendor’s equipment and has replacements available when calibration time comes around. Other equipment can be used with optional drivers if available.

Immunity/Susceptibility Testing

Immunity/susceptibility testing is even more demanding with respect to the test process. It is nearly impossible to do an EN 55024 immunity calibration or test by hand, much less a 16 point field uniformity test. Leveling algorithms that control the injected current while increasing the forward power to a calibrated level are a great benefit of automation.
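As a rough picture of what a leveling loop does, here is a minimal Python sketch. It is not taken from any commercial package: the callbacks `set_drive_dbm` and `read_level_db` are hypothetical stand-ins for the signal generator and the monitoring sensor, and the sketch assumes the monitored level rises roughly dB for dB with the drive. The step limit and drive ceiling are there so the loop creeps up on the target instead of jumping past it.

```python
def level_to_target(set_drive_dbm, read_level_db, target_db,
                    tolerance_db=0.5, start_dbm=-40.0, max_dbm=0.0,
                    max_step_db=3.0, max_iterations=100):
    """Raise the drive in bounded steps until the monitored level is on target.

    Returns the drive setting (dBm) that produced a reading within
    tolerance_db of target_db. Raises RuntimeError if the drive ceiling
    is hit or the loop does not settle.
    """
    drive = start_dbm
    for _ in range(max_iterations):
        set_drive_dbm(drive)
        error_db = target_db - read_level_db()
        if abs(error_db) <= tolerance_db:
            return drive
        # Clamp the correction so one bad reading cannot cause a large jump.
        step = max(-max_step_db, min(max_step_db, error_db))
        drive += step
        if drive > max_dbm:
            raise RuntimeError("drive limit reached before target level")
    raise RuntimeError("leveling did not converge")
```

Doing this by hand means one adjustment and one reading per step, repeated at every frequency and every field probe position, which is why automation carries this part of the test so well.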

READ SIDEBAR: Computer Assisted Testing (CAT) by Kimball Williams

Benefits of Automating

I am a private pilot and can fly hundreds of miles successfully with a compass and a watch. However, with the availability of GPS and autopilots it does not make sense to fly by the old school method just because I can. Technology allows us humans to manage complex, repetitive, mundane, and time consuming tasks so that we can reduce their outputs to our level. Assurance of data integrity is one of the things we love to do, but not at every data point. As for the question of ‘What did we do with all that time we saved?’, it seems that our industry and our bosses had no problem filling in that space, and I cannot remember ever being bored because I finished a task 75% quicker than it would normally take. The benefits of automation are throughput and data integrity. Time saved always seems to take care of itself!

Future Outlook of EMC Test Automation

It seems that standards committees are always developing more rigorous methods for testing systems to be compatible with the electromagnetic environment (EME). Since the EME is likely to become more occupied with intentional and unintentional sources, the requirements to measure and control them will need to change as well. Test methods must adapt to emulate the environment. We are still using a quasi-peak measurement that was developed to quantify the annoyance of interference on an AM radio. Now we need to be able to coexist with impulsive sources that are common to cell phones and other frequency hopping devices. Power systems use switching that can couple over to other devices via capacitive and inductive paths; these emissions radiate above the ‘conducted’ frequency range yet may meet the ‘radiated’ range limits, and sensitive electronics need to be able to work in proximity to them.

This would certainly require new detection circuits similar to the demod circuits of the wireless electronics we use. Test frequency ranges and limit lines will also change. The automation will have to use multiple testing or multiple detection during the same test in order to make it feasible.

I do not think the manufacturers of the measurement instruments should design their internal computers for automation but more for signal processing. Automation software will have to adapt to the instrument capabilities and focus on control and data reduction so that we humans can interpret and communicate the complex data that the instruments will provide.

Interpreting and communicating the data means that we can display the data in terms that will indicate its compatibility to the EME. Test reports are required by standards to have a lot of information in them, but the end customer of the report typically only looks at the graphs, then the tables. So the graphs and tables will need to be very informative and relevant.

Joe Tannehill has been working in the EMC field for over 27 years, starting with Intergraph Corp. in Huntsville, Alabama testing and designing graphics workstations and associated computing components. From there he worked at Gateway 2000 and at Dell designing laptop, desktop and enterprise systems. In 2005, Joe took a detour to the Department of Defense world working for Raytheon in Sudbury, Massachusetts where he was a design consultant with MIT’s Draper Labs. Longing for the heat of Texas he moved back to Austin to work with Motion Computing and then at ETS-Lindgren in his present position as an EMC engineer managing and supporting TILE! EMC test software, including organization of the annual TILE! Users Group meeting. This year’s meeting will be held on August 17 in Long Beach, California during the IEEE EMC Symposium. Joe is the inventor of two EMC related patents. He may be reached at joe.tannehill@ets-lindgren.com.