The reliability of electronic technologies (including the software and firmware that run on them) can become critical when the consequences of errors, malfunctions, or other types of failure include significant financial loss, mission loss, or harm to people or property (i.e. functional safety).

Electromagnetic interference (EMI) can be a cause of unreliability in all electronic technologies [1], so must be taken into account when the risks caused by malfunctioning electronics are to be controlled.

Most EMC engineers believe that the normal EMC tests do a good job of ensuring reliable operation, and indeed they do make it possible to achieve normal availability (uptime) requirements. However, the levels of acceptable risk in safety-related applications are generally three or more orders of magnitude more demanding, and applications where (for example) mission or financial risks are critical can be as demanding as safety-related applications, sometimes more so.

Unfortunately, most functional safety engineers leave all considerations of EMI to EMC engineers, with the result that – at the time of writing – most major safety-related projects do little more to control EMI than ensure that the items of equipment used pass when tested to the relevant immunity test standards. As a result, safety risks due to EMI are not yet being effectively controlled.

The challenge for engineers is to demonstrate adequate confidence in the reliability of their designs in the operational electromagnetic environment (EME).

The solution [2] is to use well-proven EMC design techniques plus risk assessment that shows the overall design achieves acceptable risk levels, all verified and validated by a variety of techniques (including EMC testing).

This article only addresses the issue of how to take EMI into account when performing a Risk Analysis.

The Nature of the Problem

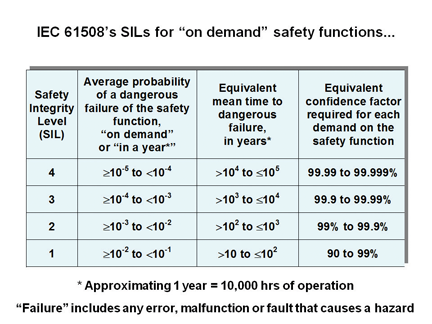

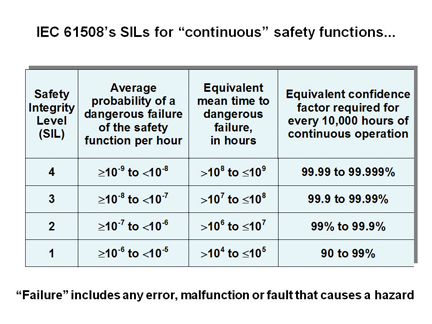

“High reliability”, “mission-critical”, “safety-critical”, or security applications might need to have a mean time to failure (MTTF) of more than 100,000 years (corresponding to Safety Integrity Level 4 (SIL4) in IEC 61508 [3], see Figures 1 and 2).

Mass-produced products (e.g. automobiles, domestic appliances, etc.) also require very low levels of safety risk because of the very large numbers of people using them on average at any one time.

It is usually very difficult to determine whether a given undesirable incident was caused by EMI, and the resulting lack of incidents officially attributed to EMI has led some people to feel that current EMI testing regimes must therefore be sufficient for any application. Indeed, it is commonplace to read words such as “…passes all contractual and regulatory EMC tests and is therefore totally immune to all EMI.”

However, as Ron Brewer says in [4]: “…there is no way by testing to duplicate all the possible combinations of frequencies, amplitudes, modulation waveforms, spatial distributions, and relative timing of the many simultaneous interfering signals that an operating system may encounter. As a result, it’s going to fail.”

Prof. Nancy Leveson of MIT says, in [5]: “We no longer have the luxury of carefully testing systems and designs to understand all the potential behaviors and risks before commercial or scientific use.”

The IET [6] states: “Computer systems lack continuous behavior so that, in general, a successful set of tests provides little or no information about how the system would behave in circumstances that differ, even slightly, from the test conditions.”

Finally, Boyer et al [7] say: “Although electronic components must pass a set of EMC tests to (help) ensure safe operations, the evolution of EMC over time is not characterized and cannot be accurately forecast.” This is one of the many reasons why any EMC test plan that has an affordable cost and duration is unlikely to be able to demonstrate confidence in achieving better than 90% reliability. The reasons for this are given in [4], [8], [9], section 0.7 of [10], and [11].

Since the confidence levels that are needed for functional safety compliance (for example) are a minimum of 90% for SIL1 in [3], 99% for SIL2, 99.9% for SIL3 and 99.99% for SIL4, it is clear that more work needs to be done to be able to demonstrate compliance with [3] and similar functional safety standards (e.g. [12] [13] and others such as IEC 61511 and IEC 62061), as regards the effects of EMI on risks.

The best solution at the time of writing is to use well-proven EMC design techniques to reduce risks, and to verify and validate them using a number of different methods, including immunity testing. Risk assessment is a vital part of such an approach, as required by [3]. Unfortunately, neither the IEC’s basic publication on Functional Safety [3], nor the basic IEC publication on “EMC for Functional Safety” [2], describe how to take EMI into account during risk assessment; although [10] – a practical guide based on [2] – does cover this.

This article is concerned with how to include EMI issues as part of a risk assessment (whether the risks are safety or other, e.g. financial), and is based on [10] and a paper I presented in 2010 [14]. I have also presented papers on assessing lifetime electromagnetic, physical and climatic environments [15], appropriate EMC design techniques [16], and verification and validation methods (including testing) [17].

As Prof. Shuichi Nitta says in [18]: “The development of EMC Technology taking account of systems safety is demanded to make social life stable.” I hope this article makes a contribution to this essential work, but there is much more yet to be done!

Risk Assessment

Most readers of IN Compliance will be very familiar with EMC, but perhaps not (yet) with Functional Safety, so a brief introduction to hazards and risks is probably a good idea.

What are “hazards” and “risks”?

A HAZARD is anything with potential to do HARM, and the hazard level is derived from the type of harm and its severity. For example, a bladed machine can cause harm by cutting skin, flesh, or even bone. We say it has a cutting hazard and define its severity as being either minor, serious, or deadly (other classifications are possible) depending on the maximum depth of cut and the respective parts of the anatomy.

A hazard has a probability of occurrence. The RISK is the product of the hazard level, its probability of occurrence, and a factor that takes into account the observation that, when they occur, not all hazards result in the same harm; for example if there is the possibility of avoidance or limitation. (Risk level = {Hazard level} × {Probability of the hazard occurring} × {Possibility of hazard avoidance or limitation}).

Other multiplying factors can also be applied, and often are. We may decide that a safety risk level should vary according to social factors, such as the type of person (for example, small children, pregnant women, healthy adults, etc.).

We could also consider a financial hazard to be the loss of a defined amount of money, and the financial risk to be the amount of the money multiplied by the probability of losing it.
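The two products described above can be written out as a minimal sketch. The numeric hazard scale, the `Hazard` class, and the example figures are all hypothetical illustrations; a real project would take its scales and factors from its own risk chart.

```python
from dataclasses import dataclass
from math import prod

# Hypothetical numeric scale for the hazard level (assumption, not from any standard).
HAZARD_LEVEL = {"minor": 1, "serious": 10, "deadly": 100}


@dataclass
class Hazard:
    name: str
    severity: str              # key into HAZARD_LEVEL
    probability: float         # probability of the hazard occurring (0 to 1)
    avoidance: float = 1.0     # 1.0 = harm cannot be avoided; lower = more avoidable
    other_factors: tuple = ()  # optional extra multipliers, e.g. social factors


def risk_level(h: Hazard) -> float:
    """Risk level = hazard level x probability x avoidance factor x any other factors."""
    return HAZARD_LEVEL[h.severity] * h.probability * h.avoidance * prod(h.other_factors)


def financial_risk(amount: float, probability: float) -> float:
    """Financial risk = amount of money at stake x probability of losing it."""
    return amount * probability


blade = Hazard("cutting", "serious", probability=0.001, avoidance=0.5)
print(risk_level(blade))
print(financial_risk(1_000_000, 0.01))
```

In this sketch, anything that raises `probability` raises the risk level in direct proportion, while the hazard level itself stays the same.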

EMI does not affect the hazards themselves but can affect their probability of occurrence, which is why EMI must be taken into account when trying to achieve acceptably low risk levels.

Nothing can ever be 100% reliable; there is always some risk. Ensuring that risks are not too high requires hazard analysis and risk assessment, which take the information on a system’s environment, design, and application and – in the case of [3] – create the Safety Requirements Specification (SRS) or its equivalent in other standards.

Using hazard analysis and risk assessment also helps avoid the usual project risks of over- or under-engineering the system.

The amount of effort and cost involved in the risk assessment should be proportional to the benefits required. These include: compliance with legal requirements, benefits to the users and third parties of lower risks (higher risk reductions), and benefits to the manufacturer of lower exposure to product liability claims and loss of market confidence.

Risk assessments are generally applied to simple systems

Modern control systems can be very complex and are increasingly often “systems of systems”. If they fail to operate as intended, the resulting poor yields or downtimes can be very costly indeed. Risk assessment – done properly – is a complex exercise in which competent and experienced engineers apply at least three different types of assessment technique to the entire system under review.

To risk-assess a complex system is a large and costly undertaking, but it is not usually necessary because the usual approach (e.g. [3]) is to ensure the safety of the overall control system by using a much simpler and separate “safety-related system” that can be risk-assessed quite easily. Safety-related systems often use “fail-safe” design techniques – when an unsafe situation is detected, the control system is overridden and the equipment under control brought to a condition that prevents or mitigates the harms that it could cause.

For many types of industrial machinery, the safe condition is one in which all mechanical movement is stopped and hazardous electrical supplies isolated. The safe condition might be triggered, for example, by an interlock with a guard that allows access to hazardous machinery.

Such a fail-safe approach is, of course, useless in many life-support applications or anywhere where continuing operation-as-usual is essential, such as “fly-by-wire” aircraft. However, even in situations where a guard interlock or similar fail-safe techniques cannot be used – and the control system is too complex for a practicable risk assessment – it is still generally possible to improve reliability by means of simple measures that can be cost-effectively risk-assessed.

A typical approach is to use multiple (redundant [19]) control systems with a voting system so that the majority vote is used to control the system. Alternatively, control might be switched from a failing control system to another that is not failing (e.g. the Space Shuttle uses a voting system based on five computers [20]).
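The majority-vote idea can be sketched in a few lines. This is only an illustration: the function name and the example commands are hypothetical, and a real voter would also have to deal with timing, channel health, and its own failure modes.

```python
from collections import Counter
from typing import Hashable, Optional, Sequence


def majority_vote(outputs: Sequence[Hashable]) -> Optional[Hashable]:
    """Return the value agreed by a strict majority of channels, or None if there is none."""
    if not outputs:
        return None
    value, count = Counter(outputs).most_common(1)[0]
    return value if count > len(outputs) / 2 else None


# Three redundant channels, one of which disagrees (hypothetical commands).
command = majority_vote(["OPEN", "OPEN", "CLOSE"])
print(command)
```

When the function returns `None` there is no majority, and the caller would have to choose what to do next, for example triggering a fail-safe or switching to another control system.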

Specifying the acceptable risk level

For each identified hazard, the level of risk that is specified should be at least broadly acceptable. UK Health and Safety publications [21] and [22] provide very useful guidance on this, and on what may be tolerable under some circumstances.

Acceptable risk levels are culturally defined and not amenable to mathematical calculation. They must be specified before the design process starts. The engineering principle of establishing an acceptable risk level and then designing to achieve it is enshrined in the functional safety standards ([3], [12], [13], and others) and helps:

- manufacturers maximize their return on investment over the short, medium, and long terms by reducing their exposure to lawsuits and having a valid defense in case of a lawsuit,

- engineers and organizations abide by the IEEE’s ethical guidelines [23].

Acceptable risk levels for functional safety are generally provided by “Risk Charts” (or “Risk Graphs”), e.g. Annex D in Part 5 of [3], Annex D of [12], Section 7.4.5 of Part 3 of [13].

Reducing the risk from an identified hazard is performed by what [3] calls a “Safety Function”. [3] applies a SIL specification to each safety function, chosen according to the rules in [3] to achieve the specified risk level for the particular hazard being risk-reduced. So, for example, a safety-related system might provide three safety functions at SIL 2 and two safety functions at SIL 3.

Developed from Tables 2 and 3 of Part 1 of [3], Figures 1 and 2 show the reliability ranges covered by SILs. Examples of safety functions that operate on-demand include the braking system of an automobile and guard interlocks in industrial plant. Examples of safety functions that operate continuously include the speed and/or torque control of automobile and other types of engines, and the motors in some machines and robots.

Figure 1: Safety systems that operate “upon demand”

Figure 2: Safety systems that operate continuously
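As a rough illustration of the ranges in Figures 1 and 2, the sketch below maps a target failure measure to a SIL and converts a continuous-mode failure rate into an MTTF in years. The band limits are the commonly quoted values from Tables 2 and 3 of Part 1 of [3] as I understand them, and should be checked against the standard itself. The function names are hypothetical, and treating MTTF as the reciprocal of the failure rate assumes a constant failure rate.

```python
HOURS_PER_YEAR = 8766  # 365.25 days

# (lower bound inclusive, upper bound exclusive) for each SIL
PFD_BANDS = {1: (1e-2, 1e-1), 2: (1e-3, 1e-2), 3: (1e-4, 1e-3), 4: (1e-5, 1e-4)}  # on demand
PFH_BANDS = {1: (1e-6, 1e-5), 2: (1e-7, 1e-6), 3: (1e-8, 1e-7), 4: (1e-9, 1e-8)}  # per hour


def sil_for(value, bands):
    """Return the SIL whose band contains the value, or None if it lies outside all bands."""
    for sil, (low, high) in bands.items():
        if low <= value < high:
            return sil
    return None


def mttf_years(failures_per_hour):
    """Mean time to failure in years, assuming a constant failure rate."""
    return 1.0 / failures_per_hour / HOURS_PER_YEAR


print(sil_for(5e-4, PFD_BANDS))   # probability of failure on demand
print(sil_for(5e-9, PFH_BANDS))   # dangerous failures per hour
print(round(mttf_years(1e-9)))    # most demanding end of the SIL 4 band
```

The last line shows where an MTTF of more than 100,000 years comes from: it is the reciprocal of the most demanding continuous-mode rate in the SIL 4 band.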

There is no requirement for a safety function to employ electronic technologies. In many situations, mechanical protection (such as bursting discs, blast walls, mechanical stops, etc.), management measures (such as not allowing people nearby during operation), and combinations of them can help achieve a safety function’s SIL.

A SIL 3 specified safety function requiring, say, 99.95% reliability, could be achieved by employing three independent protection methods, each one of which achieves just 99.65%. All three, two, just one, or none of these protection devices or systems could use electronic technology. (Note that 99.95% reliability is seven times tougher than 99.65%.)

The most powerful EMC design technique for achieving a SIL is not to use any electronic or electromechanical technologies in the safety-related system!

A philosophical point

Many EMC test professionals, when faced with the information on hazards and risks above, say that because there is no evidence that EMI has contributed to safety incidents, this means the EMC testing done at the moment must be sufficient for safety. However, anyone who uses this argument is either poorly educated in matters of risk and risk reduction, or is hoping the education of their audience is lacking in that area [24].

The assumption that because there is no evidence of a problem, there is no problem, was shown to be logically incorrect in the 19th Century [25]; its use by NASA led directly to the Columbia space shuttle disaster [26]. Redmill [27] affirms: “Lack of proof, or evidence, of risk should not be taken to imply the absence of risk.”

EMI problems abound [28], but it is unlikely that incidents caused by EMI will be identified as being so caused, because:

- Errors and malfunctions caused by EMI often leave no trace of their occurrence after an incident.

- It is often impossible to recreate the EM disturbance(s) that caused the incident, because the EM environment is not continually measured and recorded.

- Software in modern technologies hides effects of EMI (e.g. EMI merely slows the data rate of Ethernet™ and blanks the picture on digital TV, whereas its effects are obvious in analogue telecommunications and TV broadcasting).

- Few first-responders or accident investigators know much about EMI, much less understand it, and as a result the investigations either overlook EMI possibilities or treat them too simplistically.

- Accident data is not recorded in a way that might indicate EMI as a possible cause.

If a thorough risk assessment shows EMI can cause financial, mission or safety hazards, then undesirable incidents due to EMI will occur. If the probability of the incidents caused by EMI is higher than acceptable risk levels, their rate should be reduced until they are at least acceptable (i.e. risk reduction).

Hazards can be caused by multiple independent failures

It is often incorrectly assumed that only single failures need to be considered (so-called: “single-fault safety”). However, the number of independent failures that must be considered as happening simultaneously depends upon the required level of safety risk (or degree of risk reduction) and the probabilities of each independent failure occurring.
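A minimal sketch of this dependence follows. It assumes the failures are truly independent, so the probability of several coinciding is the product of their individual probabilities, and it lists every combination that is too likely to be ignored for a given target. The component names and numbers are invented for illustration.

```python
from itertools import combinations
from math import prod


def combos_to_assess(failure_probs, target):
    """List every combination of independent failures whose joint probability
    is at or above the target, and so cannot be dismissed as negligible."""
    names = sorted(failure_probs)
    result = []
    for k in range(1, len(names) + 1):
        for combo in combinations(names, k):
            if prod(failure_probs[n] for n in combo) >= target:
                result.append(combo)
    return result


# Hypothetical per-period failure probabilities.
probs = {"sensor": 1e-3, "relay": 1e-2, "cpu": 1e-4}

print(combos_to_assess(probs, target=5e-3))  # lenient target
print(combos_to_assess(probs, target=5e-7))  # demanding target
```

With the lenient target only one single failure needs attention, while the demanding target also pulls in two of the double-failure combinations, which is why “single-fault safety” cannot be assumed in general.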

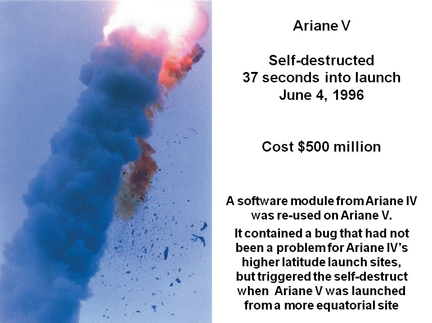

Not all failures are random

Many errors, malfunctions, and other faults in hardware and software are reliably caused by certain EMI, physical or climatic events, or user actions (for example, corrosion that degrades a ground bond or a shielding gasket after a time, an over-voltage surge that sparks across traces on a printed circuit board, etc.).

These are “systematic” errors, malfunctions, or other types of faults. They are not random, but may be considered “built-in” and so guaranteed to occur whenever a particular situation arises. An example is shown in Figure 3.

UK Health and Safety [30] found that over 60% of major industrial accidents in the UK were systematic, i.e., were “designed-in” and so were bound to happen eventually.

Figure 3: A systematic failure for Ariane V [29]

Not all failures are permanent

Many errors, malfunctions, or other types of failure can be intermittent, for example:

- poor electrical connections (a very common problem that can create false signals)

- transient interference (conducted, induced, radiated)

- “sneak” conduction paths caused by condensation, conductive dust, etc.

The operation of error detection and correction techniques, microprocessor watchdogs, and even manual power cycling can cause what would otherwise have been permanent failures to be merely temporary ones.

“Common-Cause” errors, malfunctions and other failures

Two or more identical units may be exposed to the same conditions at the same time, for example:

- ambient under- or over-temperature

- power supply under- or over-voltage

- EM disturbances (conducted, induced, radiated, continuous, transient, etc.)

- condensation, etc.

This can cause the units to suffer the same systematic errors, malfunctions, etc., which are known as “common-cause” failures.

So, using multiple redundant units [19] – a very common method for improving reliability against random errors, malfunctions or other types of failures – will not reduce risks of systematic failures if identical units, hardware, or software are used to create the redundant system.
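The effect can be illustrated with a simple “beta-factor” approximation, a common simplification that is not taken from this article. Here a fraction `beta` of each unit’s failures is assumed to be common-cause and so hits both redundant units together. The function name and the numbers are hypothetical.

```python
def p_fail_redundant_pair(p_unit: float, beta: float) -> float:
    """Approximate failure probability of two redundant units, either of which
    can do the job alone, when a fraction beta of failures is common-cause."""
    independent = ((1.0 - beta) * p_unit) ** 2  # both units fail independently
    common = beta * p_unit                      # one cause takes out both units
    return independent + common


p = 1e-3  # hypothetical failure probability of one unit
for beta in (0.0, 0.1, 1.0):
    print(beta, p_fail_redundant_pair(p, beta))
```

With `beta = 0` the pair is far better than a single unit. With `beta = 1`, which represents identical units suffering the same systematic failure, the pair is no better than one unit on its own.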

Risk assessments need multiple techniques, and expertise

No one risk assessment technique can ever give sufficient “failure coverage”, so at least three and probably more different types should be applied to any design:

- at least one “inductive” or “bottom-up” method, such as FMEA [31] or Event-Tree

- at least one “deductive” or “top-down” method, such as Fault Tree Analysis [32] or HAZOP

- at least one “brainstorming” method, such as DELPHI or SWIFT

No risk analysis methods have yet been developed to cover EMI issues, so it is necessary to choose the methods to use and adapt them to deal with EMI. Successful adaptation requires competency, skills, and expertise in both safety engineering and real-life EMI (not just EMC testing).
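The “at least one of each type” rule above could be checked mechanically when drawing up a risk assessment plan. The sketch below is a hypothetical helper that classifies only the six methods named in the list. It says nothing about whether the chosen methods have been adapted properly for EMI.

```python
# Classification of the methods named in the list above.
METHOD_TYPES = {
    "FMEA": "inductive",
    "Event-Tree": "inductive",
    "Fault Tree Analysis": "deductive",
    "HAZOP": "deductive",
    "DELPHI": "brainstorming",
    "SWIFT": "brainstorming",
}
REQUIRED_TYPES = {"inductive", "deductive", "brainstorming"}


def missing_method_types(plan):
    """Return the method types that the planned methods do not yet cover."""
    covered = {METHOD_TYPES[m] for m in plan if m in METHOD_TYPES}
    return REQUIRED_TYPES - covered


print(sorted(missing_method_types(["FMEA"])))
print(sorted(missing_method_types(["FMEA", "HAZOP", "SWIFT"])))
```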

Devices can fail at two or more pins simultaneously

EMI can cause two or more pins on a semiconductor device, such as an integrated circuit (IC), to change state simultaneously. An extreme example is “latch-up” – when all output pins simultaneously assume uncontrolled fixed states. This is caused by high temperatures, ionizing radiation, and over-voltage or over-current on any pin of an IC. The presence of any one of the three causes increases an IC’s susceptibility to latch-up due to the other two.

However, traditional risk analysis methods (e.g. FMEA) have often been applied very simplistically to electronics. For example, I have seen (so-called) FMEA-based risk assessments on safety-critical electronics, conducted by a major international manufacturer of automobiles, that simply went through all of the ICs one pin at a time and assessed whether a safety problem would be caused if each pin was permanently stuck high or low. This was also the only failure mode identification method applied.
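To give a feel for how much a one-pin-at-a-time analysis leaves out, the sketch below counts the permanent stuck-at fault cases for a small device under both assumptions. The pin names are hypothetical. It still ignores transient and intermittent states, so the real failure space is larger again.

```python
from itertools import product

PIN_STATES = ("ok", "stuck_high", "stuck_low")


def single_pin_faults(pins):
    """One pin at a time, stuck high or stuck low (the simplistic approach)."""
    return [{p: s} for p in pins for s in ("stuck_high", "stuck_low")]


def multi_pin_faults(pins):
    """Every combination in which one or more pins are stuck simultaneously."""
    faults = []
    for states in product(PIN_STATES, repeat=len(pins)):
        fault = {p: s for p, s in zip(pins, states) if s != "ok"}
        if fault:  # skip the all-ok case
            faults.append(fault)
    return faults


pins = ["D0", "D1", "CLK", "RESET"]  # hypothetical 4-pin example
print(len(single_pin_faults(pins)))
print(len(multi_pin_faults(pins)))
```

For n pins the simplistic approach covers 2n cases, whereas allowing simultaneous faults gives 3^n − 1 cases. This is one reason why design approaches that deal with whole classes of failure are more practical than exhaustive enumeration.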

Reasonably foreseeable use/misuse

It should never be assumed that an operator will always follow the Operator’s Manual, or would never do something that was just “too stupid.”

Assessing reasonably foreseeable use or misuse requires the use of “brainstorming” techniques by experienced personnel, and can achieve better “failure coverage” by including operators, maintenance technicians, field service engineers, etc., in the exercise.

The Two Stages of Risk Assessment

When creating the SRS (or equivalent), the system has not yet been designed, so detailed risk analysis methods such as FMEA, FMECA, etc., cannot be applied. At this early stage, only an “Initial Risk Assessment” is possible, but there are many suitable methods that can be used and many of them are listed in 3.7 of [10].

During the design, development, realization, and verification phases of the project, detailed information becomes available on all of the mechanics, hardware and software. Appropriate risk analysis methods (such as FMEA) are applied to this design information – as it becomes available – to guide the project in real-time and to achieve the overall goals of the Initial Risk Assessment.

As the project progresses the Initial Risk Assessment accumulates more depth of analysis, eventually (at the end of the project) producing the “Final Risk Assessment” – a very important part of a project’s safety documentation. But it can only be completed when the project has been fully completed, and its real engineering value lies in the process of developing it during the project to achieve acceptable risk levels (or risk reductions) while also saving cost and time (or at least not adding significantly to them).

Incorporating EMI issues in Risk Assessments

The reasonably foreseeable lifetime EM environment is an important input to an EMI risk analysis process, as it affects the risk level directly. Because exposure to other environmental effects such as shock, vibration, humidity, temperature, and salt spray (and also faults, user actions, wear, and misuse) can degrade EM characteristics, their reasonably foreseeable lifetime assessments are also important inputs.

Many foreseeable environmental effects can occur simultaneously, for example:

- Two or more strong radio-frequency (RF) fields (especially near two or more cellphones or walkie-talkies, or near a base-station or broadcast transmitter).

- One or more radiated RF fields plus distortion of the mains power supply waveform.

- One or more radiated RF fields plus an ESD event.

- A power supply over-voltage transient plus conductive condensation.

- One or more strong RF fields plus corrosion or wear that degrades enclosure shielding effectiveness.

- One or more strong RF fields plus a shielding panel left open by the user.

- Conducted RF on the power supply plus a high-impedance ground connection on the supply filter, caused by vibration, corrosion, etc., loosening the fasteners that bond it to the ground plane.

- Power supply RF or transients plus filter capacitors that have, over time, been open-circuited by over-voltages, and/or storage or bulk decoupling capacitors that have lost much of their electrolyte due to time and temperature.

Hundreds more examples could easily be given, and all such reasonably foreseeable events and combinations of them must be considered by the risk assessment.
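One way to make sure that no combination is overlooked is to generate the candidate cases systematically and then screen them for foreseeability. The sketch below uses a short, purely illustrative list of effects; a real assessment would start from the lifetime environment specification.

```python
from itertools import combinations

# Illustrative effects only; not a complete list.
effects = [
    "strong RF field",
    "mains waveform distortion",
    "ESD event",
    "supply over-voltage transient",
    "degraded enclosure shielding",
]


def simultaneous_cases(effects, max_together=2):
    """All combinations of up to max_together effects occurring at the same time."""
    cases = []
    for k in range(1, max_together + 1):
        cases.extend(combinations(effects, k))
    return cases


cases = simultaneous_cases(effects)
print(len(cases))
for case in cases[-3:]:
    print(" + ".join(case))
```

The number of cases grows quickly as more effects and larger combinations are allowed, so a list like this is a starting point for screening rather than a test plan.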

Intermittent contacts, open or short circuits, can cause spurious signals just like some kinds of EMI, and are significantly affected by the physical/climatic environment over a lifetime. One example of this kind of effect is contact resistance modulated by vibration. This effect is called “vibration-induced EMI” by some.

EMI and intermittent contacts can – through direct interference, demodulation and/or intermodulation [11] – cause “noise” to appear in any conductors that are inadequately protected against EMI (perhaps because of a dry joint in a filter capacitor). “Noise” can consist of degraded, distorted, delayed or false signals or data, and/or damaging voltage or current waveforms.

When a “top down” risk analysis method is used, it should take into account that significant levels of such noise can appear at any or all signal, control, data, power, or ground ports of any or all electronic units – unless the ports are adequately protected against foreseeable EMI for their entire lifetime, taking into account foreseeable faults, misuse, shock, vibration, wear, etc. (For radiated EMI, the unit’s enclosure is considered a port.)

The noises appearing at different ports and/or different units can be identical or different, and can occur simultaneously or in some time-relationship to one another.

When a “bottom-up” risk analysis method is used, the same noise considerations as above apply, but in this case they can appear at any or all pins of any or all electronic devices on any or all printed circuit boards (PCBs) in any or all electronic units – unless the units are adequately protected against all EMI over their entire lifetime taking into account foreseeable faults, misuse, etc., as before.

Similarly, the noises appearing at different pins or different devices, PCBs or units can be identical or different, and can occur simultaneously or in some time relationship.

It is often quite tricky to deal with all possibilities for EMI, physical, climatic, intermittency, use, misuse, etc., which is why competent “EMC-safety” expertise should always be engaged on risk assessments, to help ensure all reasonably foreseeable possibilities have been thoroughly investigated.

If the above sounds an impossibly large task, the good news is that one does not have to wade through all of the possible combinations of EMI and environmental effects, faults, misuse, etc. There are design approaches that will deal with entire classes of EMI consequences and risk analysis techniques that determine if they are a) needed, and b) effective.

For example, at one design extreme there is the “EMI Shelter” approach: a shielded filtered enclosure with a dedicated uninterruptible power supply and fiber-optic data communications is designed and verified as protecting whatever electronic equipment is placed within it from the nasty outside environment for its entire life, up to and including a number of direct lightning strikes, earthquakes, flooding and nearby nuclear explosions if required. Several companies manufacture such shelters.

Door interlocks and periodic proof testing ensure it maintains that protection for the required number of decades. Nothing special needs to be done to the safety system that is placed inside it. Of course, [3] (or whatever other functional safety standard applies) will have many requirements for the safety system, but EMI is taken care of by the EMI shelter. Validation of the finished assembly could merely consist of checking that the shelter manufacturer’s installation rules have been followed.

If the EMI shelter solution does not seem appropriate for your project, then how about a different extreme: error detection and fail-safe. It is possible to design digital hardware to use data with embedded protocols that detect any possible interference, however caused. When such interference is detected, the error is either corrected or the fail-safe is triggered. Designing sensors, transducers and analogue hardware to detect any interference is not as immediately obvious as it is for data, but can be done.
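A very simple form of the detect-then-fail-safe idea for data is sketched below, using a CRC-32 check from Python’s standard `zlib` module. The frame layout and function names are hypothetical. A 32-bit CRC detects many corruptions but not every possible one, so practical safety protocols typically add further measures such as sequence numbers and time-outs.

```python
import zlib


def make_frame(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 of the payload (hypothetical frame layout)."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def receive(frame: bytes):
    """Return the payload if its CRC matches, otherwise None.

    A None result means corruption was detected, and the caller
    should trigger the fail-safe rather than act on the data."""
    if len(frame) < 4:
        return None
    payload, received_crc = frame[:-4], int.from_bytes(frame[-4:], "big")
    return payload if zlib.crc32(payload) == received_crc else None


frame = make_frame(b"VALVE=OPEN")
corrupted = bytes([frame[0] ^ 0x01]) + frame[1:]  # flip one bit, as noise might

print(receive(frame))
print(receive(corrupted))
```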

Safety systems have been built that used this technique alone and ignored all immunity to EMI, but unfortunately they triggered their fail-safes so often that they could not be used. So, some immunity to EMI is necessary for adequate availability of whatever it is the safety system is protecting. Since passing the usual EMC immunity tests often seems to be sufficient for an acceptable percentage of uptime, this is probably all that needs to be done.

Conclusions

Any practicable EMC testing regime can only take us part of the way towards achieving the reliability levels required by the SILs in [3] or similar low levels of financial or mission risk.

Risk assessment is a vital technique for controlling and assessing EMC design engineering, but since no established risk analysis techniques have yet been written to take EMI into account, it is necessary for experienced and skilled engineers to adapt them for that purpose.

I hope that others will fully develop this new area of “EMI risk assessment” in the coming years.

References

1. Van Doorn, Marcel, “Towards an EMC Technology Roadmap,” Interference Technology’s 2007 EMC Directory & Design Guide (2007): 182-193, www.interferencetechnology.com.

2. IEC/TS 61000-1-2, Ed.2.0, 2008-11, “Electromagnetic Compatibility (EMC) – Part 1-2: General – Methodology for the achievement of the functional safety of electrical and electronic equipment with regard to electromagnetic phenomena,” IEC Basic Safety Publication (2008), http://webstore.iec.ch.

3. IEC 61508 (in seven parts), “Functional Safety of Electrical/Electronic/Programmable Electronic Safety-Related Systems,” IEC Basic Safety Publication, http://webstore.iec.ch.

4. Brewer, Ron, “EMC Failures Happen,” Evaluation Engineering Magazine (December 2007), www.evaluationengineering.com/features/2007_december/1207_emc_test.aspx.

5. Leveson, Nancy, “A New Accident Model for Engineering Safer Systems,” Safety Science, Vol. 42, No. 4 (April 2004): 237-270, http://sunnyday.mit.edu/accidents/safetyscience-single.pdf.

6. IET, “Computer Based Safety-Critical Systems” (September 2008), www.theiet.org/factfiles/it/computer-based-scs.cfm?type=pdf.

7. Boyer, Alexandre et al, “Characterization of the Evolution of IC Emissions After Accelerated Aging,” IEEE Trans. EMC, Vol. 51, No. 4 (November 2009): 892-900.

8. Armstrong, Keith, “Why EMC Immunity Testing is Inadequate for Functional Safety,” 2004 IEEE International EMC Symposium Santa Clara, CA (August 9-13, 2004) ISBN 0-7803-8443-1, pp 145-149. Also: Conformity (March 2005) www.conformity.com/artman/publish/printer_227.shtml.

9. Armstrong, Keith, “EMC for the Functional Safety of Automobiles – Why EMC Testing is Insufficient, and What is Necessary,” 2008 IEEE International EMC Symposium Detroit, MI (August 18-22, 2008) ISBN 978-1-4244-1699-8 (CD-ROM).

10. “EMC for Functional Safety”, The IET, Ed. 1 (August 2008) www.theiet.org/factfiles/emc/emc-factfile.cfm or www.emcacademy.org/books.asp.

11. Armstrong, Keith, “Why Increasing Immunity Test Levels is Not Sufficient for High-Reliability and Critical Equipment,” 2009 IEEE International EMC Symposium Austin, TX (August 17-21, 2009), ISBN (CD-ROM): 978-1-4244-4285-0.

12. “Medical devices – Application of risk management to medical devices,” ISO 14971 Ed. 2, www.iso.org.

13. Draft “Road Vehicles – Functional Safety,” ISO 26262 (10 parts), www.iso.org.

14. Armstrong, Keith, “Including EMC in Risk Assessments,” 2010 IEEE International EMC Symposium Fort Lauderdale, FL (July 25-31, 2010), ISBN: 978-1-4244-6307-7 (CD-ROM).

15. Armstrong, Keith, “Specifying Lifetime Electromagnetic and Physical Environments – to Help Design and Test for EMC for Functional Safety,” 2005 IEEE International EMC Symposium Chicago, IL (August 8-12, 2005), ISBN: 0-7803-9380-5.

16. Armstrong, Keith, “Design and Mitigation Techniques for EMC for Functional Safety,” 2006 IEEE International EMC Symposium Portland, OR (August 14-18, 2006), ISBN: 1-4244-0294-8.

17. Armstrong, Keith, “Validation, Verification and Immunity Testing Techniques for EMC for Functional Safety,” 2007 IEEE International EMC Symposium Honolulu, HI (July 9-13, 2007), ISBN: 1-4244-1350-8.

18. Nitta, Shuichi, “A Proposal on Future Research Subjects on EMC, From the Viewpoint of Systems Design,” IEEE EMC Society Newsletter, Special 50th Anniversary Section: “The Future of EMC and the EMC Society” Issue 214 (Summer 2007): 50-57, http://www.emcs.org.

19. Redundancy (Engineering), http://en.wikipedia.org/wiki/Redundancy_(engineering).

20. IBM and the Space Shuttle, www-03.ibm.com/ibm/history/exhibits/space/space_shuttle.html.

21. “Reducing Risks, Protecting People – HSE’s Decision-Making Process”, The UK Health & Safety Executive, ISBN: 0-7176-2151-0, www.hse.gov.uk/risk/theory/r2p2.pdf.

22. “The Tolerability of Risk from Nuclear Power Stations,” The UK Health & Safety Executive, www.hse.gov.uk/nuclear/tolerability.pdf.

23. IEEE Ethical Code of Practice, The IEEE, http://www.ieee.org/about/ethics.html.

24. Armstrong, Keith, “Absence of Proof Is Not Proof of Absence,” The EMC Journal, Issue 78 (September 2008): 16-19, from the archives at www.theemcjournal.com.

25. Anderson, Anthony, Presentation to the 20th Conference of the Society of Expert Witnesses at Alexander House, Wroughton, UK (May 16, 2008) www.sew.org.uk, www.antony-anderson.com.

26. Petroski, Henry, “When Failure Strikes,” New Scientist (July 29, 2006): 20, www.newscientist.com/channel/opinion/mg19125625.600-the-success-that-allows-failure-to-strike.html.

27. Redmill, Felix, “Making ALARP Decisions,” Safety-Critical Systems Club Newsletter Vol. 19 No. 1 (September 2009): 14-21, www.safety-club.org.uk.

28. “The First 500 Banana Skins,” Nutwood UK (October 2007), from www.emcacademy.org/books.asp (or read on-line at www.theemcjournal.com).

29. “Ariane V,” http://en.wikipedia.org/wiki/Ariane_5.

30. “Out of Control – Why Control Systems Go Wrong and How to Prevent Failure,” The UK Health & Safety Executive, ISBN: 0-7176-2192-8, www.hse.gov.uk/pubns/priced/hsg238.pdf.

31. IEC 60812:2006, Analysis Techniques for System Reliability – Procedure for Failure Mode and Effects Analysis (FMEA).

32. IEC 61025:2006, Fault Tree Analysis (FTA).

Acknowledgment

Many thanks to Doug Nix of Compliance InSight Consulting, Inc., for improving my text.

Keith Armstrong

After working as an electronic designer, then project manager and design department manager, Keith started Cherry Clough Consultants in 1990 to help companies reduce financial risks and project timescales through the use of proven good EMC engineering practices. Over the last 20 years, Keith has presented many papers, demonstrations, and training courses on good EMC engineering techniques and on EMC for Functional Safety, worldwide, and also written very many articles on these topics. He chairs the IET’s Working Group on EMC for Functional Safety, and is the UK Government’s appointed expert to the IEC committees working on 61000-1-2 (EMC & Functional Safety), 60601-1-2 (EMC for Medical Devices), and 61000-6-7 (Generic standard on EMC & Functional Safety).